The Art of Perfect Synchronization in Sound

Sound is a profound medium of communication and expression that transcends language, culture, and time. From the harmonious tunes of a symphony orchestra to the pulsating beats of electronic music, sound has the power to evoke emotions, convey stories, and unite people. Yet, behind every seamless musical performance or immersive auditory experience lies the intricate craft of synchronization. Synchronizing sound is both a science and an art, a fusion of precision and creativity that ensures every note, beat, and tone aligns to create a unified auditory masterpiece.

The Importance of Synchronization in Sound

Synchronization in sound is the backbone of any musical or auditory production. It refers to the precise alignment of various audio elements, such as instruments, vocals, effects, and even visual components in cases like film or live performances. Without synchronization, the result can be jarring, chaotic, and unpleasant to the listener.

In music, synchronization ensures that musicians playing different instruments stay in tempo with one another. Imagine a jazz band where the drummer and the bassist fail to sync; the groove collapses, and the rhythm loses its essence. Similarly, in a movie, poorly synchronized sound effects or mismatched dialogue can break the viewer’s immersion and diminish the storytelling impact. Perfect synchronization is what transforms individual sound elements into a cohesive and harmonious whole.

The Science Behind Synchronization

At its core, synchronization relies on the principles of physics and technology. The human ear can detect timing discrepancies on the order of milliseconds, which is why sound engineers and producers invest significant effort in timing precision during recording and post-production.

One of the foundational concepts in synchronization is the beat, the basic unit of time in music. Beats serve as the reference point for aligning rhythms, melodies, and harmonies. Technologies like metronomes and digital audio workstations (DAWs) provide tools for maintaining consistent beats. DAWs, in particular, enable sound engineers to manipulate tracks with incredible precision, ensuring every element aligns perfectly.
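
As a rough illustration of how a beat becomes a timing reference, the sketch below turns a tempo in beats per minute (BPM) into a list of beat onset times. The function name `beat_times` and its parameters are hypothetical, and a constant tempo is assumed; real DAWs also handle tempo changes and time signatures.

```python
def beat_times(bpm: float, num_beats: int, start: float = 0.0) -> list[float]:
    """Return the onset time, in seconds, of each beat at a constant tempo."""
    if bpm <= 0:
        raise ValueError("bpm must be positive")
    seconds_per_beat = 60.0 / bpm  # e.g. 120 BPM -> 0.5 s per beat
    return [start + i * seconds_per_beat for i in range(num_beats)]


print(beat_times(120, 4))  # [0.0, 0.5, 1.0, 1.5]
```

Every other element in a session can then be placed relative to these times, which is essentially what a metronome or a DAW grid provides.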

A related concern is frequency balance. Elements that are perfectly aligned in time can still mask one another if they crowd the same frequency range, so giving each sound its own part of the spectrum prevents muddiness and enhances clarity. Equalization (EQ) is a crucial tool in this process, allowing engineers to sculpt the sound spectrum and ensure each element has its rightful place.

The Artistic Dimension of Synchronization

While technology provides the tools, the art of synchronization lies in the human touch. It’s about making choices that enhance the emotional and aesthetic impact of sound. This is especially true in creative fields like film scoring, live performances, and music production.

In film, synchronization often involves aligning sound effects and music with visual cues. For example, the iconic “shower scene” in Alfred Hitchcock’s Psycho owes much of its tension to the perfectly timed string stabs that synchronize with the visual action. Similarly, in live performances, synchronization is essential for creating a sense of unity among performers and delivering a captivating experience to the audience. Bands use in-ear monitors and click tracks to stay in sync, ensuring that every member plays their part seamlessly.

In electronic music, synchronization takes on a unique form with the use of sequencers and MIDI controllers. These tools allow producers to program beats, melodies, and effects with meticulous precision while also leaving room for improvisation and experimentation. The interplay of precision and spontaneity often results in innovative and mesmerizing compositions.
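
One way sequencers balance precision and feel is quantization: moving recorded note onsets toward the nearest grid line. The sketch below is a simplified illustration, not how any particular sequencer implements it. The function `quantize` and its `subdivisions` and `strength` parameters are assumptions made for this example, and onsets are given in seconds rather than MIDI ticks.

```python
def quantize(onsets, bpm, subdivisions=4, strength=1.0):
    """Move each onset (in seconds) toward the nearest grid line.

    subdivisions: grid steps per beat (4 gives sixteenth notes in 4/4).
    strength: 1.0 snaps fully to the grid, 0.0 leaves the timing untouched.
    """
    if bpm <= 0 or subdivisions <= 0:
        raise ValueError("bpm and subdivisions must be positive")
    step = 60.0 / bpm / subdivisions  # grid spacing in seconds
    result = []
    for t in onsets:
        nearest = round(t / step) * step
        # Move only part of the way when strength is below 1.0
        result.append(t + strength * (nearest - t))
    return result


played = [0.01, 0.26, 0.49]  # slightly loose performance at 120 BPM
snapped = quantize(played, bpm=120)
print([round(t, 3) for t in snapped])  # [0.0, 0.25, 0.5]
```

A `strength` below 1.0 keeps some of the original timing, which is one way to preserve spontaneity while tightening the groove.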

Challenges in Achieving Perfect Synchronization

Despite the advancements in technology, achieving perfect synchronization is no small feat. It requires meticulous planning, exceptional skill, and unwavering attention to detail. Several challenges can arise during the process:

- Latency: In digital audio systems, latency refers to the delay between an input signal and its output. Even minor latency issues can disrupt synchronization, especially in real-time performances.

- Human Error: In live settings, musicians and performers may deviate from the intended tempo or rhythm. While this can sometimes add a unique, organic quality to the performance, it often requires corrective measures to maintain cohesion.

- Complexity of Multi-Track Recordings: With modern productions involving dozens or even hundreds of audio tracks, ensuring perfect alignment across all elements can be a daunting task.

- Dynamic Environments: In live performances, factors like stage acoustics, crowd noise, and equipment limitations can pose significant challenges to synchronization.
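
To give a sense of scale for two of these challenges, the sketch below estimates the delay added by one digital audio buffer and the delay sound takes to cross a stage. The function names are hypothetical, the speed of sound is taken as roughly 343 m/s (air at about 20 °C), and real systems add further delay from converters, drivers, and plugins that is not modeled here.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # approximate, air at about 20 degrees C


def buffer_latency_ms(buffer_size: int, sample_rate: int) -> float:
    """Delay contributed by one audio buffer, in milliseconds."""
    if buffer_size <= 0 or sample_rate <= 0:
        raise ValueError("buffer_size and sample_rate must be positive")
    return 1000.0 * buffer_size / sample_rate


def acoustic_delay_ms(distance_m: float) -> float:
    """Time for sound to travel a given distance through air, in milliseconds."""
    if distance_m < 0:
        raise ValueError("distance_m must be non-negative")
    return 1000.0 * distance_m / SPEED_OF_SOUND_M_PER_S


print(round(buffer_latency_ms(256, 48000), 2))  # 5.33
print(round(acoustic_delay_ms(10.0), 1))        # 29.2
```

Under these assumptions, a performer standing 10 meters from a speaker hears it far later than the delay of a single 256-sample buffer, which is part of why in-ear monitoring matters on large stages.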

Tools and Techniques for Synchronization

To overcome these challenges, professionals use a range of tools and techniques:

- Metronomes and Click Tracks: These provide a steady tempo reference for musicians and producers.

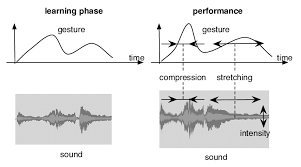

- Time-Stretching Algorithms: These allow audio tracks to be stretched or compressed without altering pitch, enabling precise alignment with other elements.

- Digital Audio Workstations (DAWs): Programs like Ableton Live, Logic Pro, and Pro Tools offer advanced features for aligning tracks and automating synchronization tasks.

- In-Ear Monitors and Stage Systems: These help performers stay in sync during live shows by providing clear audio cues and minimizing external distractions.
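
As an example of the arithmetic behind tempo matching with time-stretching, the sketch below computes how much a clip must be lengthened or shortened to play at a new tempo. The function names are hypothetical. The stretching itself, which preserves pitch, is left to whatever algorithm the audio software uses and is not implemented here.

```python
def stretch_factor(source_bpm: float, target_bpm: float) -> float:
    """Duration multiplier that makes audio at source_bpm play at target_bpm.

    Values below 1.0 shorten the clip (faster tempo); above 1.0 lengthen it.
    """
    if source_bpm <= 0 or target_bpm <= 0:
        raise ValueError("tempos must be positive")
    return source_bpm / target_bpm


def stretched_duration(duration_s: float, source_bpm: float, target_bpm: float) -> float:
    """Length of the clip, in seconds, after matching it to the target tempo."""
    return duration_s * stretch_factor(source_bpm, target_bpm)


# A 16-beat loop recorded at 100 BPM lasts 9.6 s; at 120 BPM it must last 8 s.
print(round(stretched_duration(9.6, 100, 120), 3))  # 8.0
```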

Synchronization in the Digital Age

The rise of digital technology has revolutionized the art of synchronization. With tools like artificial intelligence (AI) and machine learning, sound engineers can now automate complex synchronization tasks, saving time and enhancing accuracy. For instance, AI algorithms can analyze audio and video tracks, detect discrepancies, and make precise adjustments in real time.
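
The "detect discrepancies" step can be illustrated without any machine learning. The sketch below uses plain cross-correlation, a classical signal-processing technique swapped in here for illustration, to estimate how many samples one track lags behind another. The function `estimate_offset` and the toy signals are assumptions; production tools work on much longer signals and typically use FFT-based correlation for speed.

```python
def estimate_offset(reference, delayed, max_lag):
    """Return the lag, in samples, at which `delayed` best matches `reference`.

    A positive result means `delayed` starts later than `reference`.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, x in enumerate(reference):
            j = i + lag
            if 0 <= j < len(delayed):
                score += x * delayed[j]  # correlation at this lag
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


reference = [0, 0, 1.0, 0.5, -0.3, 0, 0, 0, 0, 0]
delayed = [0, 0, 0, 0, 0, 1.0, 0.5, -0.3, 0, 0]  # same sound, 3 samples late
print(estimate_offset(reference, delayed, max_lag=5))  # 3
```

Once the lag is known, shifting the late track earlier by that many samples brings the two back into alignment.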

Virtual reality (VR) and augmented reality (AR) have also pushed the boundaries of synchronization. In these immersive environments, sound synchronization is critical for creating a realistic and engaging experience. Developers use spatial audio techniques to ensure that sound sources align with their visual counterparts, providing a sense of depth and directionality.

The Future of Sound Synchronization

As technology continues to evolve, the possibilities for synchronization in sound are expanding. Advances in neural networks and immersive audio systems, and perhaps eventually quantum computing, may redefine how we experience sound. These advancements could not only enhance the precision of synchronization but also open new creative avenues for artists and producers.

For instance, adaptive audio systems can dynamically synchronize sound based on user interactions, creating personalized auditory experiences. Imagine a video game where the soundtrack adjusts in real time to match the player’s actions, or a concert where the music evolves in response to audience feedback.
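
One simple way an adaptive soundtrack can stay musical is to delay a requested change until the next bar line, so the transition lands on the beat. The sketch below shows only that scheduling step, assuming a constant tempo and a music clock that starts at zero. The function `next_bar_time` is hypothetical and is not taken from any particular game audio engine.

```python
import math


def next_bar_time(now_s: float, bpm: float, beats_per_bar: int = 4) -> float:
    """Return the time, in seconds, of the next bar line at or after now_s."""
    if bpm <= 0 or beats_per_bar <= 0:
        raise ValueError("bpm and beats_per_bar must be positive")
    bar_length = beats_per_bar * 60.0 / bpm  # seconds per bar
    return math.ceil(now_s / bar_length) * bar_length


# The player triggers an event 5.3 s into a 120 BPM track in 4/4 (2 s per bar).
print(next_bar_time(5.3, bpm=120))  # 6.0
```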

The art of perfect synchronization in sound is a testament to the power of human ingenuity and creativity. It is a delicate balance of science and art, precision and expression, technology and imagination. Whether it’s the intricate interplay of instruments in an orchestra, the pulsating rhythm of a DJ set, or the immersive soundscapes of a virtual world, synchronization is what brings sound to life.

As we continue to explore the possibilities of sound, one thing remains clear: synchronization will always be at the heart of our auditory experiences. It is through this artful alignment that we create not just sound, but moments of magic that resonate deeply with our emotions and connect us to one another.